- Title

- Rigid and non-rigid motion artifact reduction in X-ray CT using attention module (labs of Prof. Hyunjung Shim and Prof. Jongduk Baek)

- Date posted

- 2021.05.14

- Posted by

- School of Integrated Technology

- Post content


Rigid and non-rigid motion artifact reduction in X-ray CT using attention module

Medical Image Analysis (MEDIA), 2021. (JCR top 1%)

Y Ko, S Moon, J Baek, H Shim

Abstract:

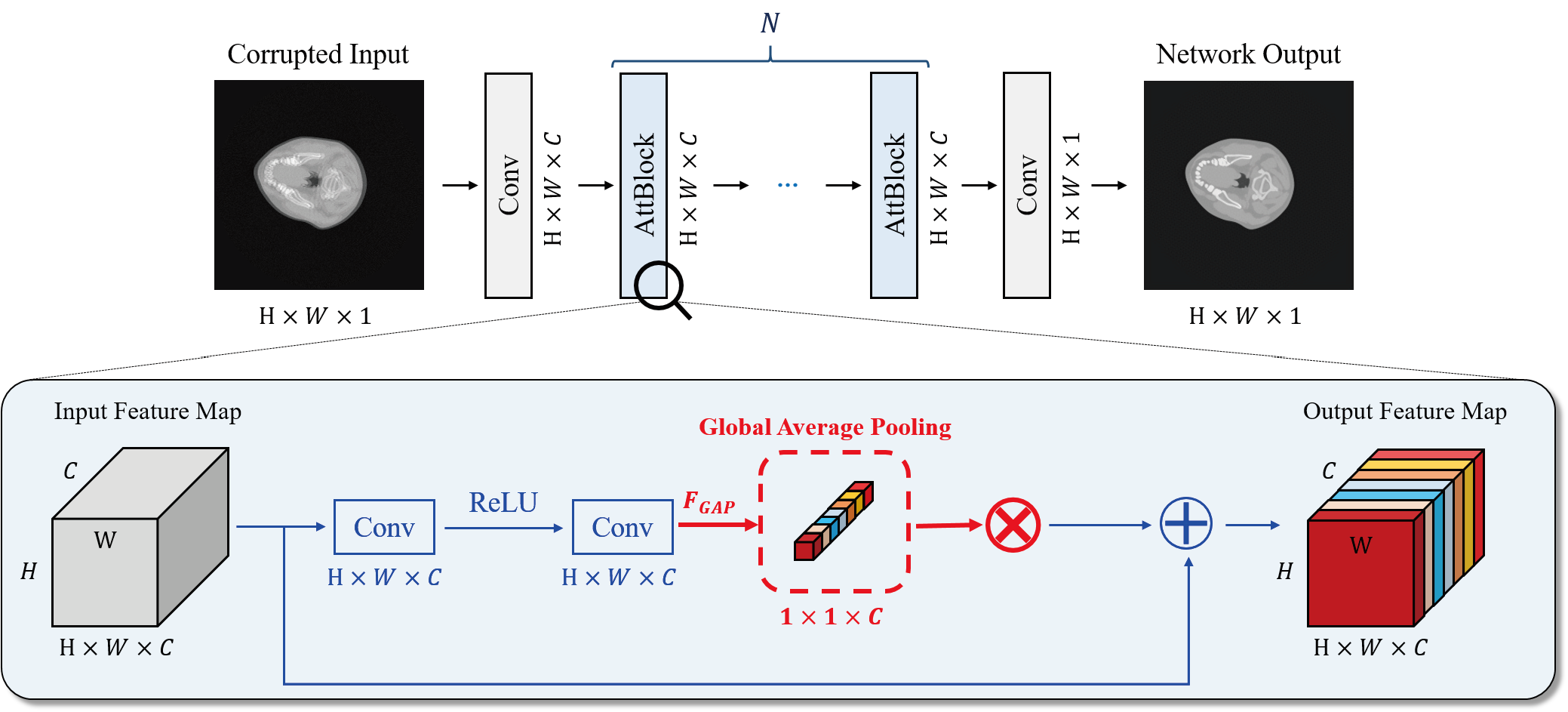

Motion artifacts are a major factor that can degrade the diagnostic performance of computed tomography (CT) images. In particular, motion artifacts become considerably more severe when an imaging system requires a long scan time, as in dental CT or cone-beam CT (CBCT) applications, where patients generate rigid and non-rigid motions. To address this problem, we proposed a new real-time technique for motion artifact reduction that utilizes a deep residual network with an attention module. Our attention module was designed to increase the model capacity by amplifying or attenuating the residual features according to their importance. We trained and evaluated the network by creating four benchmark datasets with rigid motions or with both rigid and non-rigid motions under a step-and-shoot fan-beam CT (FBCT) system or a CBCT system. Each dataset provided pairs of motion-corrupted CT images and their ground-truth CT images.

The strong modeling power of the proposed network model allowed us to successfully handle motion artifacts from the two CT systems under various motion scenarios in real time. As a result, the proposed model demonstrated clear performance benefits. In addition, we compared our model with Wasserstein generative adversarial network (WGAN)-based models and a deep residual network (DRN)-based model, which are among the most powerful techniques for CT denoising and natural RGB image deblurring, respectively. Based on extensive analysis and comparisons using the four benchmark datasets, we confirmed that our model outperformed the aforementioned competitors. Our benchmark datasets and implementation code are available at https://github.com/youngjun-ko/ct\_mar\_attention.
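
The abstract describes an attention module that amplifies or attenuates residual features according to their importance. The sketch below illustrates that general idea with NumPy only; it is not the authors' architecture, which is available in the repository above. It assumes a squeeze-and-excitation style channel gate, and the names `channel_gates`, `attention_residual_block`, `w1`, `w2`, and the reduction ratio `r` are hypothetical. The gate is scaled to the range (0, 2) so that a value of 1 leaves a channel unchanged, values below 1 attenuate it, and values above 1 amplify it.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_gates(features, w1, w2):
    """Return one gate per channel, in (0, 2), for features of shape (C, H, W).

    Assumption: a squeeze-and-excitation style gate, used here only for illustration.
    """
    squeezed = features.mean(axis=(1, 2))      # global average pool -> (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)    # bottleneck + ReLU   -> (C // r,)
    return 2.0 * sigmoid(w2 @ hidden)          # gate of 1.0 is neutral


def attention_residual_block(x, residual_fn, w1, w2):
    """Compute x + gate * residual_fn(x), with one gate per channel."""
    residual = residual_fn(x)
    gates = channel_gates(residual, w1, w2)
    # Broadcast the (C,) gates over the spatial dimensions.
    return x + gates[:, None, None] * residual


# Toy usage: random features and random (untrained) gate weights.
rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1

# np.tanh stands in for the convolutional residual branch of a real network.
out = attention_residual_block(x, np.tanh, w1, w2)
print(out.shape)  # (8, 16, 16)
```

In a trained network, `w1` and `w2` would be learned together with the residual branch, so the gates come to reflect which feature channels matter for removing the artifacts.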